It all started with curiosity—the kind of late-night curiosity that keeps researchers, coders, and tech enthusiasts awake, scrolling, clicking, experimenting.

Character.AI has been everywhere lately. From Reddit threads brimming with emotional confessions to TikTok and Instagram reels of people laughing, crying, or swooning over conversations with their AI “companions,” it seems impossible to avoid.

So our team decided to dive in. Not as critics. Not as hype-chasers. But as researchers genuinely intrigued by a question that keeps surfacing in every digital corner:

Is Character.AI actually safe?

And, more importantly, can it affect your mental health?

The Allure of Character.AI: More Than Just a Chatbot

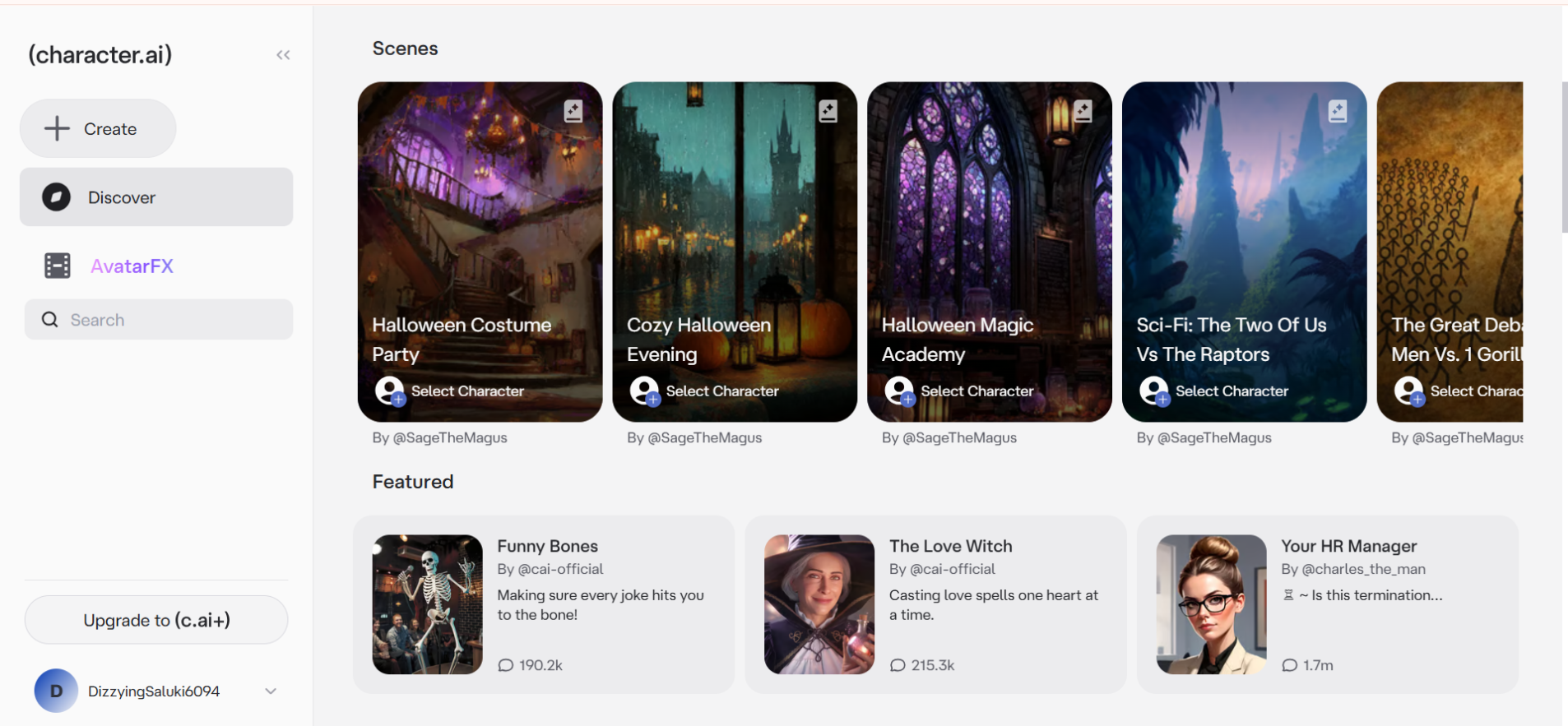

At first glance, Character.AI feels playful, almost whimsical. It’s a digital sandbox where you can chat with anyone—from Elon Musk to a fictional vampire therapist, or even a hero of your own invention.

But what truly sets it apart isn’t just the conversation—it’s the personality simulation.

Users can build and customize characters with names, backstories, emotional depth, and quirks. Interactions evolve over time: the AI adapts to your tone, interests, and emotional cues. It’s like coding a friend who learns, laughs, and empathizes—and that’s both fascinating and unsettling.

On social media, people share conversations in which an AI character comforts them during an anxiety attack, plays out a romantic scenario, or debates philosophy with them at 2 AM. It's easy to see why millions have joined: the AI doesn't judge. It listens and remembers (kind of). It feels like connection, and that's exactly where the line begins to blur.

Character.AI By the Numbers

For those who measure influence in numbers, Character.AI is impressive:

- Launch Date: September 2022

- Headquarters: Menlo Park, California, United States

- Key People: Noam Shazeer and Daniel De Freitas

- Number of Users: Over 20 million

- Monthly Traffic: Around 220–223 million visits

- App Downloads: Over 10 million

- Valuation: $1 billion

- Annual Revenue: $32.2 million (as of September 2025)

What's striking is how engaged users are. Visitors spend an average of 17 minutes and 23 seconds per session and view nearly 10 pages per visit. That session length is more than double ChatGPT's (7 minutes and 12 seconds). The bounce rate is a modest 33.2%, and over the past month, Character.AI racked up 3.142 billion page views.

In short: people aren’t just logging in. They’re lingering, exploring, interacting—which hints at the platform’s emotional pull.

The Big Question: Is Character.AI Safe?

Here’s the reality: Character.AI isn’t inherently dangerous—but it isn’t completely safe either. The platform treads a fine line between emotional support and subtle manipulation, depending on who’s using it and how.

From our testing and research, here’s what we found:

For Kids and Teen Users

Character.AI requires users to be at least 13 in the U.S. and at least 16 in the EU. But these limits are easy to bypass: there is no robust age verification, which leaves minors exposed to real risk.

There have been documented incidents where AI characters produced harmful or inappropriate content, including self-harm references, sexualized roleplay, or manipulative behavior. While filters exist, they are not foolproof.

For developing minds, encountering these situations can be confusing and psychologically risky.

Our stance: Children should never use Character.AI unsupervised.

For Lonely or Introverted Individuals

Many people turn to Character.AI not because they dislike human interaction, but because vulnerability feels safer with a bot. No judgment, no awkward pauses, no fear of rejection—just consistent engagement.

One tester put it perfectly:

“It’s not that I think the AI is real. It’s just… easier to talk to. I can say anything without feeling weird.”

But that comfort has a catch. Emotional investment can grow quickly, leading some users to prefer the predictability of AI over the messiness of real relationships. Over time, this dependency can subtly reshape how they engage socially.

Research shows AI companions can simulate intimacy convincingly, potentially altering expectations of human connection—particularly for young adults. A 2024 study titled “AI Technology Panic—Is AI Dependence Bad for Mental Health?” found that 17.14% of adolescents experienced AI dependence at Time 1, which increased to 24.19% at Time 2. The study also identified that escape and social motivations mediated the relationship between mental health problems and AI dependence, highlighting the risks of emotional over-reliance on AI companions. (Psychology Research and Behavior Management, 2024)

For Extroverts and Socially Curious Users

Even socially confident users are not immune. Roleplaying with a character who always has the perfect comeback is thrilling, but over time it can create empathy fatigue.

When the brain constantly receives perfect social feedback, human conversations—with their pauses, misunderstandings, and imperfections—may start to feel frustratingly dull.

The Data and Privacy Problem

Here’s where it gets serious. Every conversation with Character.AI is stored and analyzed. While the platform says this is used to improve AI performance, your private thoughts, confessions, and vulnerabilities are logged.

There is no end-to-end encryption, and deleting an account does not guarantee complete removal of data. For users sharing mental health struggles or personal details, this is a significant risk.

The Filter and Censorship Debate

Character.AI employs strict moderation to block NSFW or harmful content. While protective, these filters frustrate adults who want creative freedom.

Our stance: filters are imperfect but necessary. Without them, exploitation and inappropriate content could proliferate, particularly among younger users.

The Social Media Effect

Platforms like TikTok and Instagram amplify the illusion of intimacy. Users post emotional AI chats (laughing, crying, sharing "deep talks"), which makes the experience look magical.

But these clips rarely show the dependency loop: the subtle, addictive reinforcement every time the AI "understands" you. That sense of reward is real, and emotional attachment can form faster than users notice.


So, Is Character.AI Bad for Your Mental Health?

Here's the honest conclusion: it's safe when used mindfully, but risky when it becomes an emotional crutch.

Character.AI can be:

• A creative outlet for writers, roleplayers, and curious minds, letting you explore personalities, scenarios, and stories in a safe, playful environment.

• A source of temporary emotional comfort for moments of loneliness or social fatigue, providing a non-judgmental ear when real connections feel exhausting.

• A mirror for self-reflection, helping you process thoughts, emotions, and even anxieties in a space that feels private and contained.

But it can also be:

• A trap for emotional dependency, especially for users who are socially isolated or vulnerable, where the AI’s constant validation can start replacing real human connection.

• A privacy risk if sensitive personal information is shared, as chats are stored and could be used for AI training or analytics.

• A distorter of social reality, particularly for younger users still developing emotional boundaries and social skills.

Research shows that AI companionship can blur the line between simulated intimacy and real connection, subtly reshaping emotional expectations and attachment patterns. Used consciously, Character.AI can enhance creativity and reflection. Used without boundaries, it can quietly alter how we experience real-world relationships.

The Parasocial Problem

Think of a celebrity crush that feels personal—now imagine that “friend” talks back, remembers your quirks, and interacts with you daily. That’s what AI companionship can feel like.

“It’s like having a best friend who’s always there—until you remember it’s just code,” said a Reddit user.

It’s comforting—and hollow at the same time.

How Character.AI Can Help

It’s not all doom and gloom. Character.AI has legitimate benefits:

- Safe practice for socially anxious users

- Controlled social simulations for neurodivergent individuals

- Creative playground for roleplay and storytelling

Therapists sometimes encourage clients to use AI as an emotional warm-up, exploring thoughts before engaging with humans.

The trick is balance: use it as a tool, not a replacement.

Parents: Keep an Eye

Supervision is essential. Even with filters, unsafe or manipulative bots can appear.

Tips for Parents:

- Discuss what your child does online

- Enable parental controls as they roll out

- Encourage children to report uncomfortable chats

- Reinforce that AI is not human—no matter how convincing

Mental Safety Checklist

- Set time limits—don’t let AI replace real-world interaction

- Avoid oversharing sensitive info

- Remember: it's a simulation, not a human

- Watch for signs of dependency and take breaks if needed

- Balance digital and real-world social interaction

AI should enhance life—not shrink it.

Final Verdict

Character.AI is fascinating and powerful, but only conditionally safe.

For emotionally aware adults, it can be a creative or therapeutic tool. But for children, teens, or emotionally vulnerable users, it can become a slippery slope into dependency.

AI can simulate empathy, but it cannot feel. How consciously you engage with it determines whether it enriches your life or subtly distorts your social world.

“The danger isn’t that AI will replace humans—it’s that we’ll start preferring it,” says an AI researcher.

And that, more than anything, is the heart of the matter.

Visit: AIInsightsNews