Grief has always reshaped how people speak, remember, and search for comfort. What has changed is where those conversations now take place. Increasingly, they happen inside chat windows.

People talk to AI companions late at night. Some use journaling bots to calm panic. Others experiment with griefbots built from a loved one’s voice or messages. These tools promise presence at a time when absence feels unbearable.

That raises a difficult and emotionally charged question:

Can AI companions help with mourning, or do they interfere with the psychological work of grief?

This guide examines that question using established grief psychology, emerging research from 2023 to 2026, and real clinical concerns. It does not treat AI as a cure. It treats it as a tool—one that can help in narrow ways and harm when misused.

How Psychology Understands Grief Today

Grief is not a single emotional reaction. It is a long-term process of adapting to irreversible loss.

Contemporary psychology has moved away from rigid stage models. Instead, it emphasizes flexibility, fluctuation, and meaning-making. People do not “progress” neatly. They move back and forth.

One framework is especially relevant when evaluating AI grief support.

The Dual-Process Model of Grief

The Dual-Process Model of Grief, developed by Margaret Stroebe and Henk Schut (1999), remains one of the most influential models in grief psychology.

It describes healthy grieving as an oscillation between two coping modes:

Loss-oriented coping

Focusing on the loss itself: sadness, yearning, anger, memories, and emotional pain.

Restoration-oriented coping

Adjusting to life changes: rebuilding routines, learning new roles, reconnecting socially.

Healing does not mean abandoning grief. It means being able to move between these modes without becoming stuck in one.

This distinction matters because some AI tools encourage movement, while others quietly trap users in loss-oriented coping.

AI Companions vs Griefbots: An Important Distinction

Not all grief-related AI works the same way. From a psychological perspective, the difference is critical.

AI Companions

AI companions are general-purpose conversational systems used for emotional support, reflection, or grounding, and they can support mental health during grief. Unlike griefbots, they are not designed to simulate a deceased person.

People use them to:

- Calm anxiety
- Talk through emotions
- Journal verbally
- Reduce nighttime distress

In clinical terms, these tools function as emotion-regulation aids.

Examples of popular AI companions include Replika AI and Character AI Pipsqueak.

Griefbots (Memorial AI)

Griefbots are trained on a deceased person’s digital footprint—texts, emails, voice notes, videos—and simulate conversations as that person.

Research published between 2024 and 2025 has raised concerns that this form of “externalized dialogue” can interfere with acceptance of irreversibility, particularly when used early or excessively.

For vulnerable users, the risks are significantly higher than with general AI companions.

Why People Turn to AI Grief Support

The appeal of AI during grief is not novelty. It is availability.

People often turn to AI because:

- Emotional distress peaks when others are asleep
- Friends may feel uncomfortable discussing loss repeatedly
- Grief lasts longer than social patience
- Therapy is costly or delayed
- They want to speak freely without managing others' emotions

AI offers an immediate, judgment-free presence that can provide temporary relief, and research on how AI companions affect loneliness suggests this effect is real. Availability alone, however, does not equal healing.

Where AI Can Help With Mourning

When used intentionally and with limits, AI can support certain aspects of grieving.

Emotional Regulation During Acute Distress

AI-assisted mental health tools have been studied extensively over the past decade. Randomized and observational studies (2023–2025) on tools like Woebot and Wysa show consistent benefits for:

- Reducing acute anxiety
- Supporting cognitive reframing
- Helping users name emotions during distress

In grief, this kind of support can stabilize overwhelming moments. It does not process the loss, but it can prevent emotional flooding.

Facilitating Emotional Expression

Many people struggle to articulate grief aloud. AI can serve as a neutral space for:

- Journaling thoughts
- Writing letters to the deceased
- Describing memories
- Rehearsing difficult conversations

From a psychological standpoint, expression reduces internal pressure. Even when the listener is non-human, the act of organizing emotion into words matters. Setting healthy boundaries with your AI companion can help keep this support safe and constructive.

Psychoeducation About Grief

AI can help normalize experiences that often alarm grieving people:

- Sudden emotional waves
- Numbness
- Guilt during moments of relief
- Feeling "behind" others

This aligns with psychoeducational approaches commonly used in grief counseling. Research on the psychology behind AI attachment also highlights how AI can influence emotional regulation and relational patterns.

Where AI Falls Short—and May Cause Harm

Despite these benefits, AI has limits that matter deeply in mourning.

No Reciprocal Attachment

Healthy human relationships involve mutual vulnerability, boundaries, and emotional risk.

AI offers none of these. Psychologists refer to this as an attachment asymmetry: the human invests emotionally, while the AI cannot. Research from Waseda University (2025) found that constant availability can foster anxious attachment patterns in some users.

In grief, when attachment systems are already activated, this imbalance can reinforce AI companion dependency.

Difficulty Supporting Acceptance

One of the core tasks of grief is accepting that the loved one will not return. Studies published in 2025 on memorial AI suggest that prolonged simulated interaction may delay this acceptance, especially when the AI speaks as the deceased.

Remembrance supports healing. The illusion of presence, such as the kind offered by so-called digital resurrection AI, often does not.

No Shared Meaning

Grief is relational. Healing often happens through shared memory, ritual, and mutual acknowledgment of loss. AI can listen. It cannot share loss.

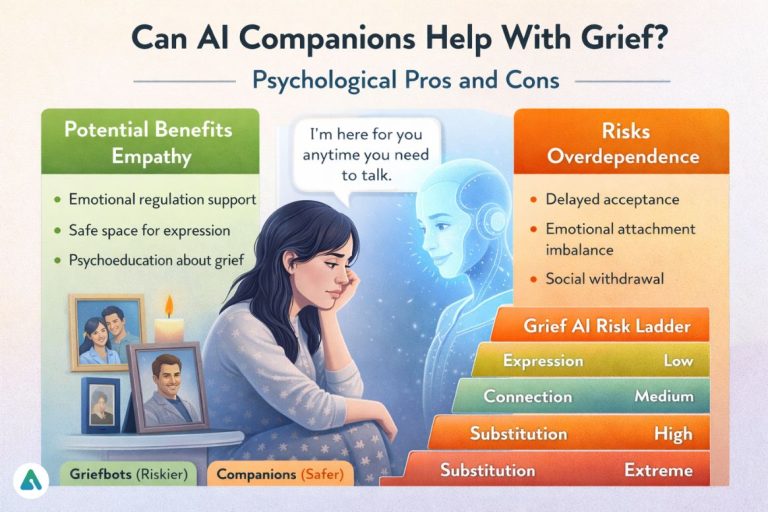

Grief AI Risk–Benefit Matrix

| Purpose | AI Tool Type | Psychological Impact | Risk Level |
|---|---|---|---|
| Regulation | General AI chatbots | Helps manage panic and emotional overwhelm | Low |
| Expression | AI journaling tools | Supports reflection and memory processing | Low |
| Connection | Griefbots (voice/text) | Comfort or delayed acceptance | High |
| Substitution | Always-on companions | Dependency and social withdrawal | Extreme |

A 4-Layer Framework for Using AI During Grief

This framework helps determine whether AI use supports mourning or replaces it.

Layer 1: Regulation (Generally Safe)

Breathing exercises, grounding prompts, and emotional labeling.

Layer 2: Expression (Generally Safe)

Journaling, letter-writing, and memory narration without simulation.

Layer 3: Meaning-Making (Use With Caution)

Interpreting signs, asking what the deceased "would say," or seeking their approval.

This layer risks projection.

Layer 4: Substitution (Psychologically Unsafe)

Replacing human contact, avoiding real-world adaptation, maintaining an illusion of presence.

When AI use consistently reaches Layer 4, it interferes with grief rather than helping it.

Warning Signs of Unhealthy AI Reliance During Grief

- Preferring AI interaction over human contact
- Anxiety when unable to access the AI
- Avoiding rituals or remembrance with others
- Believing the AI understands the deceased better than living people
- Delaying life changes to preserve a simulated connection

These are not moral failures. They are indicators that grief needs human containment.

Reliance of this kind can gradually crowd out engagement with other people. Understanding how AI companions affect loneliness, and practicing critical thinking exercises that help maintain perspective, may help counter that drift.

Digital Legacy and the Risk of Secondary Grief

A growing concern in 2026 is what some psychologists describe as secondary grief: distress caused by decisions about a person's digital afterlife.

Major platforms now allow users to designate Digital Legacy Contacts, specifying how data should be handled after death. Clinicians increasingly recommend that these decisions be made before loss occurs.

When memorial AI is created without the deceased person's prior consent, families may face unexpected ethical and emotional consequences.

AI Fatigue and the Return to Human Support

An emerging pattern in 2026 is AI fatigue. After prolonged use, many users report a sense of emptiness. The AI responds correctly, but nothing accumulates. There is no shared history, no mutual vulnerability, no growth.

Psychologically, this often pushes people back toward:

- Support groups
- Therapy
- Family conversations
- Community rituals

This is not failure. It reflects a natural human need for reciprocal meaning.

Case Illustration (Composite)

A man in his 30s lost his partner unexpectedly. He used a general AI companion nightly to manage panic and insomnia.

At first, it helped. Over time, he withdrew from friends, preferring the AI’s predictability. In therapy, the AI was reframed as a regulation tool, not a relationship. Use was time-limited. Human support was gradually reintroduced.

His grief did not disappear. It became livable.

Can AI Companions Cure Loneliness After Loss?

No. AI may reduce the feeling of loneliness temporarily, but loneliness in grief stems from lost shared meaning, not lack of conversation.

Only human relationships can repair that rupture.

Faith, Meaning, and Grief AI

For many people, grief is spiritual as well as emotional. AI systems that answer existential questions with certainty risk overriding personal belief systems or encouraging magical thinking.

Healthy grief allows uncertainty.

FAQs

Q. Can AI help with grief?

AI can help with grief by supporting emotional regulation and expression, such as journaling, grounding, or naming feelings. However, AI cannot replace grief therapy, shared mourning, or human emotional support. Psychologists view AI as a short-term coping aid, not a substitute for real relationships or professional care.

Q. Can AI companions cure loneliness?

No, AI companions cannot cure loneliness. While AI may temporarily reduce the feeling of loneliness, it does not resolve the deeper relational loss caused by grief. Loneliness after loss stems from broken shared meaning, which only human relationships and community support can restore.

Q. Can AI replicate human empathy?

AI cannot replicate human empathy. AI systems simulate empathy through language patterns but do not experience emotions, vulnerability, or moral responsibility. This lack of reciprocal feeling is why AI empathy may feel comforting initially but emotionally limited over time.

Q. Is using griefbots unhealthy?

Using griefbots is not inherently unhealthy, but timing and boundaries matter. Overuse—especially early in grief—may delay acceptance of loss by maintaining an illusion of continued presence. Psychologists recommend caution and time-limited use, particularly with voice or personality-based memorial AI.

Q. What is the hardest death to grieve?

The hardest deaths to grieve often involve sudden loss, child loss, or unresolved relationships. These situations provide little time for emotional preparation and can complicate acceptance, meaning-making, and adjustment, increasing the psychological burden of grief.

Q. What should you avoid while grieving?

While grieving, avoid isolation, emotional suppression, and replacing human connection with simulations. Avoiding real-world support or relying solely on AI or distractions can prolong grief rather than help integrate it into daily life.

Q. Can AI replace grief counseling or therapy?

No, AI cannot replace grief counseling or therapy. Licensed therapists provide human attunement, ethical responsibility, and personalized care that AI systems cannot offer. AI may be used as a supportive tool alongside—not instead of—professional or community-based support.

Conclusion

So, can AI companions help with mourning?

Yes—within limits.

AI can support emotional regulation, expression, and understanding during acute distress. It cannot replace human connection, shared loss, or the psychological work of accepting absence.

Used intentionally, AI can be a temporary bridge. Used without boundaries, it becomes a barrier.

Grief does not need to be optimized.

It needs time, reality, and relationship.


Disclaimer: This article is for informational purposes only and is not a substitute for professional medical, psychological, or therapeutic advice. AI companions and grief-support tools may provide emotional support, but they cannot replace therapy, human connection, or clinical guidance. Always consult a licensed mental health professional for personalized care. Use AI tools mindfully, and avoid relying on them as a sole source of support.